On June 24, 2025, the security community learned of two high-severity vulnerabilities in a widely used large language model framework. Tracked as CVE-2025-23264 and CVE-2025-23265, they affect versions of the framework prior to 0.12.0. Both are code injection flaws that could allow attackers to execute arbitrary code, escalate privileges, and gain access to sensitive information.

This disclosure underscores a crucial reality: even frameworks designed for cutting-edge AI applications are vulnerable to attack if security gaps remain unaddressed. So, what went wrong? How were these vulnerabilities introduced, and more importantly, how can you ensure that your AI systems remain secure?

🔍 What Exactly Happened?

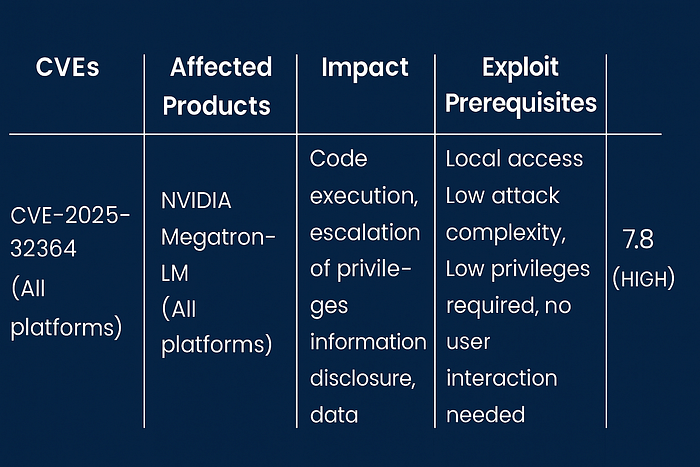

The vulnerabilities stemmed from issues within the Python components of the framework, which handled file ingestion improperly, allowing attackers to inject malicious code. The flaws carried a CVSS v3.1 score of 7.8, indicating high severity.

Key Details:

- Vulnerabilities: Code injection flaws that can lead to code execution, privilege escalation, and information disclosure.

- Exploit vector: A maliciously crafted file supplied to the framework. The attacker needs local access, but only minimal privileges.

- Impact: Potential arbitrary code execution, data tampering, and exposure of confidential system data.

The flaws were identified and reported by security researchers, who also collaborated with the framework’s security team to mitigate the risks. The root cause was not an obscure, one-off bug but an oversight in a crucial aspect of system design: how files were ingested into the system.

🛡 How Did This Happen?

These vulnerabilities were largely due to insufficient validation in the framework’s file ingestion process. Without adequate checks, an attacker could supply a specially crafted file that triggers arbitrary code execution when the framework processes it.
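To make this class of flaw concrete, here is a minimal Python sketch. It is a generic illustration only and does not reproduce the framework's actual code or the real exploit: the function names and sample inputs are hypothetical. It contrasts a loader that evaluates file contents as code with one that accepts only plain data literals.

```python
import ast


def load_record_unsafe(text: str):
    # DANGEROUS (hypothetical example): eval() executes any expression
    # found in the file contents, so the file author controls what runs.
    return eval(text)


def load_record_safe(text: str):
    # ast.literal_eval() accepts only Python literals (dicts, lists,
    # strings, numbers, ...) and rejects function calls and attribute access.
    try:
        return ast.literal_eval(text)
    except (ValueError, SyntaxError) as exc:
        raise ValueError("rejected untrusted input") from exc


# Hypothetical file contents: one benign record, one crafted expression.
benign = "{'name': 'sample', 'tokens': 128}"
crafted = "__import__('os').getcwd()"  # harmless stand-in for a real payload

print(load_record_safe(benign))
try:
    load_record_safe(crafted)
except ValueError as exc:
    print("blocked:", exc)
```

The safe loader still parses attacker-controlled text, so in practice it would normally be paired with size limits and a strict schema check on the parsed result.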

While this incident highlights the importance of secure code practices, it also serves as a reminder that even established and heavily used frameworks can have vulnerabilities that aren’t immediately evident. The security of a system relies not just on its core functionality but on how well its components handle edge cases — like file input, user permissions, and data processing.

⚡ What Can Be Done to Prevent This in the Future?

For those who rely on similar AI frameworks, this incident serves as an urgent call to action. Here’s what can be done to mitigate such risks in your own systems:

- Regular Patching & Updates: Always ensure that you are using the latest version of the framework. In this case, upgrading to version 0.12.1 resolves both vulnerabilities.

- Secure File Ingestion: Implement strict validation and sanitization of all files ingested by the system to prevent malicious code injection.

- Access Control: Restrict execution permissions for critical components and apply least privilege principles.

- Continuous Monitoring: Employ real-time security monitoring for unusual behavior, particularly when files are being processed or executed.
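As a rough sketch of what the "Secure File Ingestion" item could look like in practice, the Python function below checks a candidate file before anything parses it. The allow-list, the size cap, and the function name are assumptions chosen for illustration, not settings taken from the framework.

```python
from pathlib import Path

# Assumed policy values for illustration; tune them for your own pipeline.
ALLOWED_SUFFIXES = {".json", ".jsonl", ".txt"}
MAX_BYTES = 10 * 1024 * 1024  # 10 MiB


def validate_ingest_path(candidate: str, ingest_root: str) -> Path:
    """Return the resolved path if it passes every check, else raise ValueError."""
    root = Path(ingest_root).resolve()
    # resolve() collapses ".." segments and follows symlinks before the check.
    path = (root / candidate).resolve()
    if not path.is_relative_to(root):  # requires Python 3.9+
        raise ValueError("path escapes the ingestion directory")
    if path.suffix.lower() not in ALLOWED_SUFFIXES:
        raise ValueError(f"file type {path.suffix!r} is not allowed")
    if not path.is_file():
        raise ValueError("not a regular file")
    if path.stat().st_size > MAX_BYTES:
        raise ValueError("file exceeds the size limit")
    return path
```

Checks like these narrow what reaches the parser, but they do not make an unsafe parser safe. They work best alongside a patched framework and a process running with the least privilege it needs.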

These simple yet effective measures can significantly reduce the risk of similar incidents in the future.
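The patching step can also be automated. The sketch below, using only the Python standard library, compares an installed package version against the 0.12.1 release named above. The distribution name is left as a parameter because this article does not name the package, and the simple parser is an assumption that handles plain numeric versions only.

```python
from importlib import metadata
from typing import Optional, Tuple

PATCHED = (0, 12, 1)  # fixed release named in this article


def parse_version(text: str) -> Tuple[int, int, int]:
    """Parse the leading numeric 'X.Y.Z' part of a version string."""
    parts = []
    for piece in text.split(".")[:3]:
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        parts.append(int(digits or 0))
    while len(parts) < 3:
        parts.append(0)
    return (parts[0], parts[1], parts[2])


def is_patched(installed: str, minimum: Tuple[int, int, int] = PATCHED) -> bool:
    return parse_version(installed) >= minimum


def check_installed(dist_name: str) -> Optional[bool]:
    """Return True/False for an installed package, or None if it is absent."""
    try:
        return is_patched(metadata.version(dist_name))
    except metadata.PackageNotFoundError:
        return None
```

A check like `check_installed("<your-framework-package>")` could run in CI or at service start-up and fail the build when it returns `False`. Because this parser ignores pre-release suffixes such as `rc1`, a dedicated version-parsing library is the more robust choice for production use.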

Key Takeaways:

This incident is a stark reminder that AI frameworks, no matter how robust, can harbor vulnerabilities that expose your organization to significant risk. Trusting the vendor is not enough: your own security posture must be proactive, continuous, and layered.

Are You Prepared to Respond to Vulnerabilities in Your AI Frameworks?

At Finstein, we specialize in securing AI frameworks and other critical systems within your organization. We can help you:

- Assess security risks in third-party AI systems

- Implement secure coding and data-handling practices

- Establish continuous monitoring and incident response protocols to mitigate vulnerabilities

Is your security framework ready for the future?

Schedule a call with Finstein today.

📧 Email: Praveen@Finstein.ai

📞 Phone: +91 99400 16037

🌐 Website: www.cyber.finstein.ai